Browsing the internet without uBlock Origin is bad for your health.

Raymond Hill is a hero of our times. Not even kidding.

*googles the name*

Raymond Earl Hill was an American tenor saxophonist and singer, best known as a member of Ike Turner’s Kings of Rhythm in the 1950s.

Well, I mean we all have our own ideas about the world.

True, true

Ad blockers don’t protect you against dumbass frontend devs who serve 5mb png files to be stuffed into 600x400 boxes.

I especially hated wallpaper websites that load full-size pictures in preview grids

Removed by mod

Is 50kb enough or should I go higher.

Also. Thanks!

Removed by mod

I have mine at 50kb, and most things load, but I do get some images that don’t. Just play with it and increase it until the frequency you need to tap pictures to render them doesn’t piss you off anymore.

Thanks kind stranger, I didn’t realize this was configurable.

Shit, I might use that on a desktop with broadband.

My simple home page is 10 KB now. And you might not think that’s such a big deal, but it has more content than Google’s search page and that rings in at a couple MB IIRC. 😁

How do I measure how much data my page loads? Now I’m curious

Chrome reports the memory a tab uses if you hover over the tab. Look at the task manager within your browser. Try clicking on the burger bar, then “More tools” and “Task Manager” within the browser.

If you can’t answer this question you’re doing it wrong. It should be as simple as “how large are the files in my web hosting folder”. All this fucking tech stack bloat is so unnecessary.
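For a plain static site, "how large are the files in my web hosting folder" can be answered with a few lines of script. A minimal sketch, assuming the site is just a directory of files on disk; `folder_size_bytes` is a made-up helper name, not part of any tool mentioned here:

```python
import os


def folder_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files under `root` (hypothetical helper)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path):  # skips broken symlinks
                total += os.path.getsize(path)
    return total
```

Calling `folder_size_bytes("public")` on your build output folder gives the on-disk total in bytes. Note this is the size before any server-side compression, so what actually goes over the wire is often smaller.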

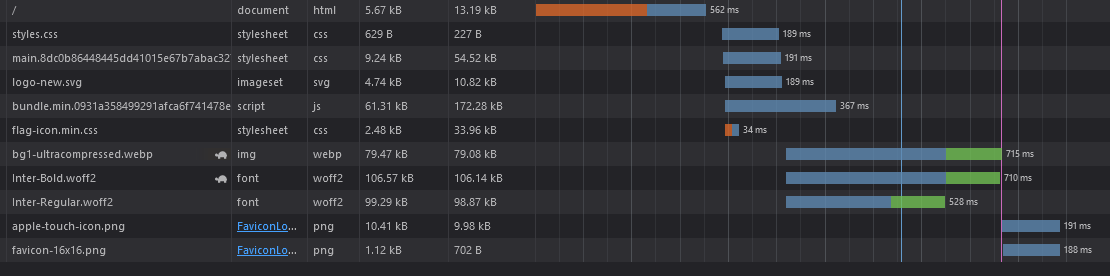

- Press F12 to open the Debugger.

- Click the “Network” tab

- Press Ctrl+F5 to reload the whole page (including previously cached files)

- Under the list of transferred files, in the greyish bar above the debug console (if enabled…), you see the total number of requests the site made and the total file size that has been transferred; lower is better.

Picture: https://superuser.com/a/1718133
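If you want the same number outside the browser, most dev tools can export the Network tab as a HAR file (a JSON log of every request). A rough sketch of totalling it up, assuming a HAR 1.2 export; `_transferSize` is a Chrome-specific extra field as far as I know, so the code falls back to the standard `headersSize` and `bodySize` fields when it is missing. The function names are made up for illustration:

```python
import json
from typing import Tuple


def load_har(path: str) -> dict:
    """Read a HAR file exported from the browser's Network tab."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def total_transferred_bytes(har: dict) -> Tuple[int, int]:
    """Return (request_count, total_bytes) for a parsed HAR document."""
    entries = har.get("log", {}).get("entries", [])
    total = 0
    for entry in entries:
        response = entry.get("response", {})
        # Assumption: Chrome exports the on-the-wire size as `_transferSize`.
        size = response.get("_transferSize")
        if size is None:
            # Standard HAR 1.2 fields; -1 means "not available", count it as 0.
            size = sum(max(response.get(key, -1), 0)
                       for key in ("headersSize", "bodySize"))
        total += max(size, 0)
    return len(entries), total
```

Usage would be something like `total_transferred_bytes(load_har("mysite.har"))`. The result can differ a little from the figure the Network tab shows, depending on what the browser counts.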

Thank you for the detailed answer!

On the contrary! I absolutely loathe how bloated webpages have become over the last few decades, so it’s very refreshing and laudable to see a webpage that tries to keep itself as small as possible.

I have been just bewildered at the proliferation of excessive scripts and garbage on seemingly every webpage over the last decade. I’m no web-dev, but I’m pretty positive that the vast majority of websites could remove 99-some percent of their javascript bs and their websites would function just fine. So many are pretty much unusable these days. It’s atrocious.

I’m a web dev and yes they could. It’s annoying that web devs get blamed for it though, the reason for all the javascript is mostly business decisions out of our control.

Mainly the tracking scripts which the marketing department adds against our will. But also it’s a lot cheaper to have a client-rendered web app than a traditional website (with client side rendering you can shut off all your web servers and just keep the api servers, our server side processing went down 90% in the switchover). And it’s more efficient for the company to have one team working in one programming language and one framework that can run the backend and frontend, so the frontend ends up being a web app even if it’s not really necessary.

Fwiw, I don’t blame the devs. That’s just me saying I’m not an expert. I understand it’s a management/corporate decision.

And thanks for the explanation. That clarifies the changes I’ve been noticing.

A bunch of websites operating as web apps would help explain the bloat. Great idea if somebody is navigating a good chunk of your website. Horrible idea if 99% of your traffic is people being linked to a news article and then leaving afterwards.

I’ve been working at organizing a bunch of stuff I’ve been collecting over the years … data, writing, lists, ideas, whatever … I kept using all sorts of services, apps, websites, cloud services and all sorts of crap to maintain them all but eventually it all becomes too complicated and breaks down.

I’ve since discovered just using simple text files and services that just use simple text mark down … no special service, nothing proprietary, easily transferable and interoperable.

I started looking at websites the same way … I don’t care what it looks like, I just want to read the information … you made it too hard for me to read your simple text info? You’re asking me to turn off my ad blockers and turn on JavaScript? All to read 200 words on your site? I’ll skip it and move on to the next site that will allow me.

I manage a web dev team. We try to optimise as much as possible but then there’s all sorts of tracking that gets tacked on by personalisation teams, opti teams, things like Tik Tok, Facebook, Twitter/X scripts inserted too… It’s pretty shit. And sometimes when things break it makes it super hard to debug too

REACT EVERYTHING

I made a stupid little page that downloads a Pathfinder 2e SRD API, and then randomly combines an ancestry, background, and class from that list and displays it on screen. It’s really nothing special, I hacked it together in an afternoon. But I showed it to a friend and they were blown away that I didn’t use a framework for it. I was like, “it does three things. Why would it need a framework? What would I even use a framework for?”

They still couldn’t believe I did it by hand.
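For a sense of how little logic "pick an ancestry, a background, and a class" needs, here is a rough sketch. It is in Python rather than the browser JavaScript the original page presumably used, and the three lists are placeholder data standing in for whatever the SRD API returns; `random_character` is a made-up name:

```python
import random


def random_character(ancestries, backgrounds, classes, rng=random):
    """Pick one entry from each list and combine them into a character."""
    return {
        "ancestry": rng.choice(ancestries),
        "background": rng.choice(backgrounds),
        "class": rng.choice(classes),
    }


# Placeholder data; the real page would download these lists from the API.
ancestries = ["Dwarf", "Elf", "Goblin"]
backgrounds = ["Acolyte", "Farmhand", "Scholar"]
classes = ["Bard", "Fighter", "Wizard"]

print(random_character(ancestries, backgrounds, classes))
```

Fetch, pick, display: three steps, and none of them needs a framework.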

I’ve chatted with a few experienced web devs, and from what I’ve heard, there’s a whole group of “web programmers” out there that just learn React and other frameworks, but don’t actually know how to code anything themselves. So many places won’t even consider you if you don’t know React.

And here I am still thinking jQuery is an excessive amount of page bloat.

This is accurate. I’m a full stack dev, and a huge number of job postings I’ve seen over the past ten years or so have switched to React.

I recommend to use an adblocker. It’s not a moral question anymore but pure self-defence, says multiple US secret services.

Don’t worry, a new internet is coming soon. Then we can leave all this behind.

We can’t even adopt IPv6 properly, let alone implement and migrate to a new and improved internet.

You mean Web3? Yeah Web3 is going to do jack shit to solve this, if anything it’ll make it worse

No, I don’t mean web3

Then what? Implants from musk? Or all audio like podcasts for everything (which is also not better whatever the marketing says).

Maybe they’re talking about the fediverse or something? Idk.

Maybe Piper Net (Silicon Valley)

I think about that show surprisingly often and how amazing a compression method like that would be right now. Our internet and storage speeds have not remotely kept up with the rapidly expanding size of files these days.

Guess where they got their inspiration from?

https://spectrum.ieee.org/where-is-hbo-silicon-valleys-real-pied-piper-look-in-troon-scotland

AKA the new internet (the SAFE network) that has been in development for 18 years and is about to hit beta here in the upcoming weeks.

Piper Net sitting on a couch surrounded by BBC (Big Bastard Companies)

That’s mostly because of the overload of ads, trackers, plugins, integrations, etc. that all websites have now. Using an adblocker halves your bandwidth usage. If you have a data cap, an adblocker is a must.

And then, optimization. As an Angular developer, knowing many websites nowadays are Angular or similar, the lack of optimization is a big problem. Most don’t even use lazy loading, not to mention managing the module imports into different components. They import everything into the main component and don’t do lazy loading leading you to websites that have 20-40MB (!!!) of initial load (when you open the website). This is so common that I think junior angular devs will slowly just kill angular popularity and give it a bad look. Takes work to optimize Angular, and many devs don’t care enough and just rush it. And then there are companies that don’t understand that web frameworks need optimization and just underpay devs or rush the dev time.

Please don’t use Angular (or similar complex web frameworks like Vue or React) if you don’t know how to correctly optimize it, or don’t have time or care for it. And don’t overload your pages with ads and integrations. You are ruining the web.

My old project I got to architect the frontend ran lean at around 300KB - part of our target audience had older phones so it was designed with that in mind.

At my new job 22MB is child’s play. To be fair they might do it better with the next version.

Similar to me. Previous job we tried our best to squeeze any ounce of optimisation out of it. Mainly because I was on the SEO team and we had to focus on the core web vitals. Everything was deferred and every image was optimised.

New job, we don’t even have any metrics.

Wet Ass Pussy is clearly the answer.

I have a WAP phone, and to my surprise google loads

I’m delighted. I wonder if they still employ one lone engineer with the title “WAP Architect” :-)

I’d hazard a guess and say it all stems from advancements in tech. There was a need to get the most out of something because of limited resources. Now that everyone’s got some fairly serious hardware (yes, even the cheap shit), there’s rarely that urge to optimize.

Rather than optimize each new technology as it comes along and gets adopted, it seems as though the mantra is “fuck it, add it to the pile”. And it snowballs. As developers feel the need to optimize less, the lessons get passed down to the next generation, and so on.

So we’re left with apps/end-user stuff that appear to have been on the opposite of a diet.

You see, stuff like this is why I never understood the wave of “Android Go” and “Lite/Go” apps a couple of years ago.

On my old low end phone, the native Twitter app ran infinitely better than the Web based “Twitter Lite”. This applied to almost every “Lite” app compared to their regular versions.

I feel like whoever started that “Webapps are great for low end” concept never actually tried to run a modern Webapp on a slow phone.

Edit: My comment is focused mostly on the push of Webapps on low end phones. I’m sure there are great, proper “Lite Apps”, and I quite like the idea of Android Go, I just think the implementation missed the mark and that a lot of companies pushed out a crappy, poorly thought out webview just to cash in on the “Lite” trend without caring about the end user.

Of course an app that is compiled ahead of time to run natively on the CPU would run faster than a web app that compiles its bloated JavaScript code on the fly.

The web app versions were meant to avoid having to download large apps, not to be faster. They are slow because the companies tried to have feature parity with the native app and also stuffed them with tracker software. Web apps are supposed to be barebones.

The lite apps also take up less user storage. Which was a big issue for lower end phones at that time. Once you ran out of storage people struggled to install new apps. Even with external SD cards, as it wasn’t an easy concept for some people to get over.

I only ever used the lite version of FB Messenger. Shit was much better than the full version, especially without all the bloated “features” that I didn’t use at best and being annoying/battery drains at worst. Was noticeably snappier on both my old and new phones. Fortunately most of my friends started using Discord and/or Signal with better features (and one less Meta app to have running).

I think that the idea of having smaller and less demanding versions of lots of apps is a good idea, as so many apps are just bloated and not optimized, coded to rely on higher specs to make up for the lack of effort in cleaning things up. The ads on ads on ads are part of the issue as well, which is only getting worse with the close buttons not loading until however many seconds have passed. It seems that the “hit box” for the close buttons is getting smaller and smaller to guarantee the ads are clicked on and then open another app or a browser. Though optimizations and better coding won’t fix dirty underhanded grifts.

I think that the idea of having smaller and less demanding versions of lots of apps is a good idea.

I think that too!

I’m just not sure Webapps are the way to go about this over native, smaller, leaner apps.

if you watch steve jobs’ 2007 iphone keynote it’s incredibly depressing now. he brags about how the iphone can load full, rich webpages instead of awful mobile versions; he loads the NYT website and gets the whole lush landscape desktop version, and taps to zoom in on certain elements. i used to be such a dork and so into tech in high school, it seemed so promising and wondrous.

i bet jobs could’ve yelled at spez about the API changes and gotten him to relent

i bet jobs could’ve yelled at spez about the API changes and gotten him to relent

Why would Jobs care? Reddit’s app goes through the app store, Apple gets a cut of any premium users buy on it.

And why would Spez relent to Jobs? Everything Spez is doing is to get maximum payout from the IPO and then cash out. He doesn’t give a shit about the actual site anymore.

the guy that was convinced apples could cure his cancer?

“Full rich webpages” on a 2007 iPhone meant bare HTML and a kilobyte of Javascript. Anything fancy would be in Flash because JS was slow as balls, and the iPhone never ran Flash.

If we’re counting now and into the future, the EU has coerced them to finally tolerate other browsers.

… not that I’m aware of any current browser with Flash support.

Ruffle gives it support, no EU good-intention-poor-implementation regulation required. The demo link I shared above works with any browser, built in Safari included.

Oh I know, I was just suggesting more-direct support was possible. Genuine stupid coverage for a long-dead plugin.

Maybe someone could coerce Dolphin browser from Android to iOS.

I do have to say, Ruffle is the most boringly-named of the “let’s do Flash in JS” projects. The first big one was named Gordon, in an obvious pun. The follow-up was named Shumway, in a less-obvious pun. About ALF.

Speaking of historical Flash support, I actually forgot the old Puffin Browser, which I bought back in 2011 and which apparently is still around. They run a browser on their server and you get a VNC-like client to access that instance. So by no means native support, but it was super functional, at least back in the day. I haven’t used it for years, since I stopped buying iPads; my use cases are better suited to the Mac and the iPhone instead.

That is dedication I absolutely would not match. I bought Android for software freedom and mmmight have watched some pivotal Homestuck animations on a Droid 2 Global.

Even now, please don’t give Apple money.

I ended up using a static site generator for my personal site because I fucking hate JS and frameworks and WebComponents. The front page is 646 KB and it loads in 4 seconds. I’d love for it to be 1 second or less, but the fonts are a factor.

And I shrunk the shit out of that background too with pngcrush so miss me with that.

My front page is 613KB with Wordpress. Moral of the story, you don’t have to use a static website generator to have light things.

Check out https://250kb.club all performance sites focused on speed and small size.

Or maybe the 512kb.club a more reasonable balance between 250 club and the 1mb club.

Also with a view: jankfree.org for a similar focus on performance.

Can I achieve the same with vue.js or flutter? I need to learn this

And how do you plan to manage your posts, database etc. and render stuff in those? You still need some backend solution like Wordpress, you can use vue as a frontend library for it… or vanilla JS, or jQuery…

Ah, for that I’ll just dump some fast API or flask thing. Vue or flutter will just handle the front end

So… you are aware that FastAPI and Flask will always be significantly slower than Wordpress… because Python, always running processes etc.?

If you’re building a simple website / blog, just use Wordpress. It will output most of the pages as plain, simple and fast HTML; then add a few pieces of vanilla JS or Vue (if you’re into that) to make things “fluffier”. Why bother with constant XHR requests when you’re just serving simple text pages?

With Wordpress you’ll also get all the management, roles, permissions, backend for “free” and you can always, like sane people, cache the output of the most visited pages. Wordpress also provides a RESTful API if required.
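The "cache the output of the most visited pages" idea is not tied to Wordpress. A minimal in-memory sketch of output caching, assuming a single process and pages identified by a slug; `render_page` is a stand-in for the expensive part (database queries, templating), and this is not how Wordpress or any particular cache plugin does it:

```python
from functools import lru_cache

render_count = 0  # counts how often the expensive path actually runs


def render_page(slug: str) -> str:
    """Stand-in for an expensive render (database queries, templating)."""
    global render_count
    render_count += 1
    return f"<html><body><h1>{slug}</h1></body></html>"


@lru_cache(maxsize=128)
def cached_page(slug: str) -> str:
    """Serve the rendered HTML from memory after the first request."""
    return render_page(slug)


cached_page("about")
cached_page("about")    # served from the cache, no second render
cached_page("contact")
print(render_count)     # prints 2
```

A real setup also has to throw away cached pages when a post is edited; with `lru_cache` the blunt way is `cached_page.cache_clear()`.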

Loaded pretty much instantaneously on my phone (a second at most). Then again, I block third party fonts.

Honestly, 4 seconds is really slow, especially with static HTML. I built my first company’s site myself; it includes a video on the front page and jQuery, is built with PHP, and on decent Internet connections the front page loads in slightly over a second; other pages dip under that.

There are loads of tweaks you can make to -any- site, and total amount of bytes really isn’t the only speed factor here.

, but the fonts are a factor.

I’m not sure if the possibility is there depending on your use case (eg.: you are exporting the fonts) nor if the cost of doing it would be worth the shot, but you can send minified variants of fonts, too.

I have a pixel 6 and notice some lag in scrolling. Could it be that you don’t use srcsets but instead huge screenshots no matter the device screen?

Not that you’d want to because you hate JS and web components and all that, and there’s nothing wrong with your website, but NextJS supports Static Site generation.

So, JS and frameworks and webcomponents can get the job done for simple stuff nowadays. My portfolio page has a load time of 631 ms using the SSG built into NextJS, and its really similar to your website.

The entire Material Design framework in JS and Web Components in 80kb

https://clshortfuse.github.io/materialdesignweb/components/buttons.html

JS and Web Components are not the problem. Poor design is.

I love all your replies.

You wouldn’t get these responses from stackoverflow.

This isn’t even a programming or development community…it’s a general interest one.

You didn’t even ask for help.

I gotta say I came in here to flex and I learned so much. I am going to roll some of these changes really soon once I find out where to best add them to my Hugo template. I’m going to reply to some of them below to clarify some things:

It may be worthwhile to experiment with adding some preload links to the html template? or output? like below and assessing if it makes things faster for you.

This is the most interesting because I didn’t even know this was possible with HTML5, so I want to add this right away.

I have a pixel 6 and notice some lag in scrolling. Could it be that you don’t use srcsets but instead huge screenshots no matter the device screen?

The background is a large image in the CSS via background-image, I don’t know how easy it would be to change it to a srcset but I will give it a shot

The fonts can be loaded from another file that ends in the cache, lowering load time next time.

At the very least they need to load last because they are the largest burden

Haven’t done this type of optimizing in a long time, I had a quick look at the network graph for your front page (F12 dev tools in desktop browser), my understanding is it looks like you are getting blocked from loading additional resources (fonts + background) until your style sheets are fully read --pink line is document loaded i believe.

It may be worthwhile to experiment with adding some preload links to the html template? or output? like below and assessing if it makes things faster for you.

<link rel="preload" as="image" href="https://volcanolair.co/img/bg1-ultracompressed.webp" fetchpriority="high">
<!-- font preloads need crossorigin, even for same-origin files, or the font gets fetched twice -->
<link rel="preload" as="font" type="font/woff2" href="https://volcanolair.co/fonts/Inter-Regular.woff2" crossorigin>
<link rel="preload" as="font" type="font/woff2" href="https://volcanolair.co/fonts/Inter-Bold.woff2" crossorigin>

The fonts can be loaded from another file that ends in the cache, lowering load time next time.

See also The Website Obesity Crisis, nearly a decade ago.

Here’s an article on GigaOm from 2012 titled “The Growing Epidemic of Page Bloat”. It warns that the average web page is over a megabyte in size.

The article itself is 1.8 megabytes long.

The problem with picking any particular size as a threshold is that it encourages us to define deviancy down. Today’s egregiously bloated site becomes tomorrow’s typical page, and next year’s elegantly slim design.

The author links their tweet saying “your website should not exceed in file size the major works of Russian literature.” At the time, that page on Twitter was 900 KB. Today it is 11 MB.

And a lot of that is tracking nonsense.

I work on a full blown web app, and we’re about 11 MB (will look into trimming the fat). We have features like PDF report generation, 2D drawing, and fairly heavy algorithms relevant to our industry. We have thousands of Typescript files, and something like 500k+ lines of code. We also have lots of SVGs for icons, canvas stickers, etc.

So after all that, we’re about the size of an average Twitter/X page. Those are not the same order of magnitude in complexity…

And a lot of that is tracking nonsense.

That’s in the slides. It’s one of my favorites:

I always think it’s unfair to compare things to video games. Video games are so inefficient they had to invent a separate processor with hundreds of cores just to run them. Of course they end up running well.

If cheap phones had a 128-core JavaScript Processing Unit, websites would probably run fast too.

separate processor with hundreds of cores

Well, graphics rendering is very suited for parallelism. That’s why GPUs were invented.

Most other tasks are not. Most of the cores in a 128-core JPU would end up being unused. Also why JPU? It’s not like it’s significantly different from a normal CPU task.

I don’t think the person you replied to actually knows what they’re talking about.

Also no one gives a shit when a game download is 100MB, but more than 1MB is unacceptable for a website.

Imagine that, people don’t mind when they have to wait 10-15 minutes every once in a while for their game to update, but they do mind waiting 15-30 seconds every time they navigate to a new webpage.

- I have a limited amount of bandwidth on my mobile plan

- if I know a game download is large I go home where I have broadband; I don’t download large files over mobile internet

- a text-only website can be rather small with only a few KB. It’s only when you get ““Designers”” that things start to bloat, because the system fonts are not good enough and 2MB in extra fonts no sane visitor will ever notice must be downloaded.

- the marketing department REALLY needs those 10 extra trackers and analytics scripts that take 5s to load, even if they last looked at the visitor stats back in 2021 and the login has long been forgotten.

- the CEO wanted that animated AI powered talking gorilla widget he had seen at a local tradeshow where the customers can ask product or website questions (spoiler: they don’t!), which adds a few more megabytes to each page load before you even use it.

Then again, those 100 MB are usually mostly assets I want to look at or listen to. Certain websites contain 100 kB of text and pictures I want to look at and load 2 MB of JavaScript frameworks that add nothing to the usability of the site. Bonus points for automatically streaming a 20 MB video I don’t want to watch while I look for one sentence’s worth of information.

Video game developer here. A lot of anti-optimization sentiment is just excuses and/or part of some dumb trend.

Oh no, compiled languages require you to choose between variable types! Better use Javascript.

Why should we develop a proper portable app environment when we have Electron? It can even run in browsers. Imagine if you didn’t have to go to your pops to install the word processor, instead he just types in wordprocessor dot app into the browser?

What if code was so easy to understand you didn’t have to document it, and each macroblock of a function instead were a named function, so they’d be automatically documented?

And this is just the tip of the iceberg. I’m currently writing my own scripting VM, as most others have their own limitations, and would introduce a barely usable build system to my game engine (which are their own can of worms). Code as data is a very useful feature, but having to include DLL files as scripts would be very complicated due to platform differences, although also very fast. The issue comes when people treat scripting languages as full-fledged programming languages, and even scare beginners away from compiled languages, because you have to compile them, you have to choose a type, etc.

I just want to point out that interpreted languages don’t have to be slow. For example, LuaJIT is competitive with Java in terms of performance, and not that much slower than C. Likewise, PyPy is almost always consistently faster than CPython, and Python 3.13 will have a JIT. I’ve also used numba to improve performance in Python (got close enough to naive Rust to not be worth adding Rust to our pipeline).

If you want scripting languages to be fast, there are options, so the decision should instead be made based on the benefits of each. For example:

- scripting languages - generally better edit/reload experience, write once, run anywhere an interpreter exists

- compiled languages - catch common errors before running, lots of fixes for various platforms

I’m super interested in Rust because it catches way more common errors than most compiled languages, so you’re getting a lot more value for that compile step. My day job is Python + Javascript, though I have nearly 10 years with Go and most of my personal projects use Rust these days, so I feel like I’m fairly experienced here.

just excuses and/or part of some dumb trend

I agree. There are good reasons to prefer scripting languages to compiled languages and vice versa, but most people don’t seem to decide based on those reasons, they often decide based on what’s easier to hire for, what they’re familiar with, or what’s already being used.

I’m super excited about Rust gaining traction because it’s basically the best case for a compiled language I’ve seen. Maybe it’ll reverse the trend toward higher level languages and encourage a bit more provable correctness.

The real irony is that you can make games entirely with Javascript (no backend server needed) and I wouldn’t be surprised if some of those games, even with 3D rendering via three.js or babylonjs, performed better than certain websites

Original post is a much better read than this blogspam

Wealth beyond measure, sera.

Is harder to load than pubg a joke or an actual metric?

While reviews note that you can run PUBG and other 3D games with decent performance on a Tecno Spark 8C, this doesn’t mean that the device is fast enough to read posts on modern text-centric social media platforms or modern text-centric web forums. While 40fps is achievable in PUBG, we can easily see less than 0.4fps when scrolling on these sites.

ಠ▃ಠ

OH MY GOD IT LOADS SO MUCH FASTER

I’m all for reducing the size of webpages with garbage bloat but a little CSS for readability on this site would have gone a long way.

Ps. thanks for sauce

I don’t agree with him, but if you read the last appendix, this mf wrote half an essay on why he prefers to have basically no styling

It reads a lot better with Firefox’s reader mode.

Wow, first time I’ve used reader mode and it is awesome!

The Opera browser of old had a menu with custom styles (a few default plus you could add your own), I think it had one that converted to sans serif, that plus a columns width one would be perfect for this site

Modern Firefox has “Reader View” that does a similar thing. It’s just less customizable… because it’s modern Firefox.

Does a disservice to the color-coded table on this article, though.

The appendices of that post could use a rewrite. They read weird:

An example we’ve discussed before, is at a well-known, prestigious, startup that has a very left-leaning employee base, where everyone got rich, on a discussion about the covid stimulus checks, in a slack discussion, a well meaning progressive employee said that it was pointless because people would just use their stimulus checks to buy stock.

That reads like ChatGPT used reddit comments to flesh out the “article”.

B-B-BUT STORAGE’S CHEAP, BRAH!!!11! INTERNET’S FAST!!!11 CPUS ARE POWERFUL11!

Sorry, not everyone speaks rich and not everyone speaks poor